WhatsApp is rolling out a new Discord-like voice chat feature for large groups, the Meta-owned company announced on Monday. The new feature is designed to be less disruptive […]

Threads now lets users opt out of having their posts appear on Facebook and Instagram

In response to user feedback, Instagram’s Threads will now let users turn off automatic sharing of their Threads posts to other apps, including Instagram and Facebook — a […]

The Trevor Project leaves X as anti-LGBTQ hate escalates

On the one-year mark before the next presidential election, LGBTQ organization the Trevor Project announces it’s leaving X/Twitter amid escalating hate and online vitriol.

With the 2024 presidential election just a year away, advocates are doing everything they can to bring attention to the country’s most pressing social justice issues. Today, national LGBTQ youth organization the Trevor Project announced it is leaving X (formerly Twitter) amid growing anti-LGBTQ sentiment, both online and off.

“LGBTQ young people — and in particular, trans and nonbinary young people — have been unfairly targeted in recent years, and that can negatively impact their mental health. In 2023, hundreds of anti-LGBTQ bills have been introduced in states across the country, which can send the message that LGBTQ people are not deserving of love or respect. We have seen this rhetoric transcend politics and appear on social media platforms,” the Trevor Project said in a statement.

On Nov. 9, the organization posted the following message on its page:

The Trevor Project has made the decision to close its account on X given the increasing hate & vitriol on the platform targeting the LGBTQ community — the group we exist to serve. LGBTQ young people are regularly victimized at the expense of their mental health, and X’s removal of certain moderation functions makes it more difficult for us to create a welcoming space for them on this platform. This decision was made with input from dozens of internal and external perspectives; in particular, we questioned whether leaving the platform would allow harmful narratives and rhetoric to prevail with one less voice to challenge them. Upon deep analysis, we’ve concluded that suspending our account is the right thing to do.

A 2023 survey of LGBTQ teens conducted by the Trevor Project found that discrimination and online hate contribute to higher rates of suicide risk reported by LGBTQ young people.

In June, GLAAD marked X as the least safe social media platform for LGBTQ users in its annual analysis of online safety, known as the Social Media Safety Index. The report cites continued regressive policies, including the removal of protections for transgender users, and remarks by X owner Elon Musk as factors in creating a “dangerous environment” for LGBTQ Americans.

In April, a coalition of LGBTQ resource centers nationwide formally left the platform in response to the removal of hateful conduct protections for both LGBTQ and BIPOC users, saying in a joint statement: “2023 is on pace to be a record-setting year for state legislation targeting LGBTQ adults and youth. Now is a time to lift up the voices of those who are most vulnerable and most marginalized, and to take a stand against those whose actions are quite the opposite.”

Protections for the LGBTQ community and reproductive health access are expected to be a flashpoint in the upcoming election cycle, especially among Republican candidates. At the same time, social media platforms and the online spaces they create are facing a growing call to address the rise of hate-filled content and misinformation, now exacerbated by the astonishing rise of generative AI tools, that disproportionately affect marginalized communities.

The Trevor Project directs any LGBTQ young people looking for a safe space online to its social networking site TrevorSpace.org or its Instagram, TikTok, LinkedIn, and Facebook accounts: “No online space is perfect, but having access to sufficient moderation capabilities is essential to maintaining a safer space for our community.”

Zuckerberg shot down multiple initiatives to address youth mental health online, claims a new lawsuit

Newly unsealed documents in a lawsuit against Meta outline a history of rejecting opportunities to address youth mental wellbeing.

Still embroiled in lawsuits over the company’s slow move to address its platforms’ effects on young users, Meta CEO Mark Zuckerberg is now under fire for reportedly blocking attempts to address Meta’s role in a worsening mental health crisis.

According to newly unsealed court documents in a Massachusetts case against Meta, Zuckerberg was made aware of ongoing concerns about user mental wellbeing in the years prior to the Wall Street Journal investigation and subsequent congressional hearing. The CEO repeatedly ignored or shut down actions suggested by Meta’s top executives, including Instagram head Adam Mosseri and Meta’s president of global affairs Nick Clegg.

Specifically, Zuckerberg passed on a 2019 proposal to remove popular beauty filters from Instagram, which many experts connect to worsening self-image, unreachable standards of beauty, and perpetuated discrimination against people of color. Despite support for the proposal among other Instagram heads, the 102-page court document alleges, Zuckerberg vetoed the suggestion in 2020, saying he saw a high demand for the filters and “no data” that such filters were harmful to users. A meeting of mental health experts was allegedly cancelled a day before a meeting on the proposal was scheduled to take place.

The documents also include a 2021 exchange between Clegg and Zuckerberg, in which Clegg forwarded a request from Instagram’s wellbeing team asking for an investment of staff and resources for teen wellbeing, including a team to address areas of “problematic use, bullying+harassment, connections, [and Suicide and Self-Injury (SSI)],” Insider reports.

While Clegg reportedly told Zuckerberg that the request was “increasingly urgent,” Zuckerberg ignored his message.

The Massachusetts case is yet another legal hit for Meta, which has been lambasted by state governments, parent coalitions, mental health experts, and federal officials for ignoring internal research and remaining complicit in social media’s negative effect on young users.

On Oct. 25, a group of 41 states and the District of Columbia sued Meta for intentionally targeting young people using its “infinite scroll” and algorithmic behavior and pushing them towards harmful content on platforms like Instagram, WhatsApp, Facebook, and Messenger.

In 2022, Meta faced eight simultaneous lawsuits across various states, accusing Meta of “exploiting young people for profit” and purposefully making its platforms psychologically addictive while failing to protect its users.

Meta’s not the only tech or social media giant facing potential legal repercussions for its role in catalyzing harmful digital behavior. The state of Utah’s Division of Consumer Protection (UDCP) filed a lawsuit against TikTok in October, claiming the app’s “manipulative design features” negatively affect young people’s mental health, physical development, and personal life. Following a similar case from a Seattle public school district, a Maryland school district filed a lawsuit against nearly all popular social platforms in June, accusing the addictive properties of such apps of “triggering crises that lead young people to skip school, abuse alcohol or drugs, and overall act out” in ways that are harmful to their education and wellbeing.

Since the 2021 congressional hearing that put Meta’s youth mental health concerns on public display, the company has launched a series of new parental control and teen safety measures, including oversight measures on Messenger and Instagram intended to protect young users from unwanted interactions and reduce their screen time.

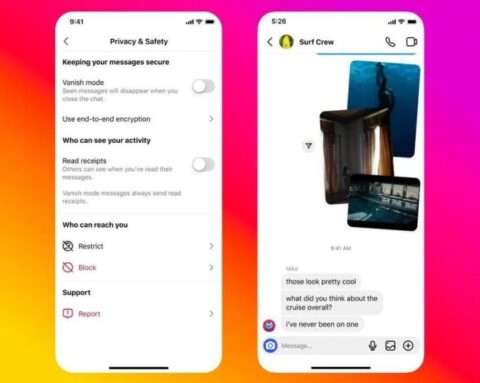

Instagram is finally testing a feature to let you turn off read receipts for DMs

Instagram is finally testing a feature that will let users turn off read receipts for Instagram DMs. This way, even if you have read someone’s message, they won’t […]

New Facebook Stories API helps creators share directly from third-party desktop or web apps

Meta is introducing a new API that makes it easier to create and share a Facebook Story directly from a third-party desktop or web app. The social networking […]

Meta says users and businesses have 600 million chats on its platforms every day

Meta is doubling down on business messages for revenue generation, as Mark Zuckerberg indicated during the company’s earnings call for Q3 2023. Zuckerberg said that the company is […]

Zuckerberg says Threads has a ‘good chance’ of reaching 1 billion users in a few years

Meta said today that its text-based social network Threads has under 100 million monthly users three months after its launch. Mark Zuckerberg noted during the company’s latest earnings […]

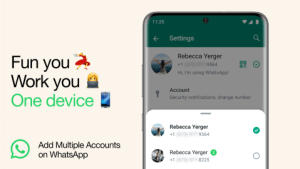

WhatsApp will now let you log into two accounts simultaneously

WhatsApp announced today that it is rolling out the ability for users to use two accounts simultaneously. That means you can switch between two accounts in the same […]

Meta Connect 2023: Everything you need to know about Quest 3 VR, smart glasses

Meta’s annual Connect conference started today, and this means lots of new hardware. Are you ready for an update on Meta Quest 3? Didn’t have time to tune in […]