The White House just announced an executive order on AI regulation, which means major players like OpenAI, Google, and Microsoft must abide by the new rules.

The White House just announced a thunderous executive order tackling AI regulation. These directives are the “strongest set of actions any government in the world has ever taken” to protect American citizens from the risks of AI, according to White House Deputy Chief of Staff Bruce Reed.

The Biden administration has been working on plans to regulate the untethered AI industry. The order builds on the Biden-Harris blueprint for an AI Bill of Rights as well as voluntary commitments from 15 leading tech companies to work with the government for safe and responsible AI development.

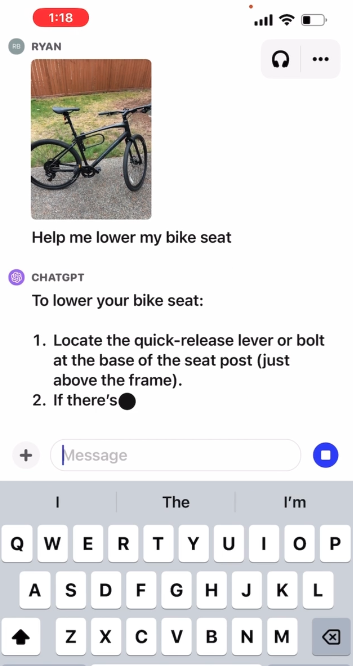

Instead of waiting for Congress to pass its own legislation, the White House is storming ahead with an executive order to mitigate AI risks while capitalizing on its potential. With the widespread use of generative AI like ChatGPT, the urgency to harness AI is real.

White House AI executive order: 10 key provisions you need to know

What does the executive order look like? And how will it affect AI companies? Here’s what you need to know.

1. Developers of powerful AI systems (e.g., OpenAI, Google and Microsoft) must share the results of their safety tests with the federal government

In other words, a prominent AI company that is training a model is required to share the results of its red-team safety tests before the model is released to the public. (A red team is a group of people who test the security and safety of a digital system by posing as malicious actors.)

According to a senior administration official, the order focuses on future generations of AI models, not current consumer-facing tools like ChatGPT. Furthermore, companies that would be required to share safety results are those that meet the highest threshold of computing performance. “[The threshold] is not going to catch AI systems trained by graduate students or even professors. This is really catching the most powerful systems in the world,” said the official.

2. Red-team testing will be held to high standards set by the National Institute of Standards and Technology

The Departments of Homeland Security and Energy will also work together to determine whether AI systems pose certain risks in the realm of cybersecurity as well as our chemical, biological, radiological, and nuclear infrastructure.

3. Address the safety risks of AI models used for science and biology-related projects

New standards for “biosynthesis screening” are in the works to protect against “dangerous biological materials” engineered by AI.

4. AI-generated content must be watermarked

The Department of Commerce will roll out guidance for ensuring all AI-generated content — audio, imagery, video, and text — is labeled as such. This will allow Americans to determine which content is created by a non-human entity, making it easier to identify deceptive deepfakes.

5. Continue building upon the ‘AI Cyber Challenge’

For the uninitiated, the AI Cyber Challenge is a Biden administration initiative that seeks to establish a high-level cybersecurity program that strengthens the security of AI tools, ensuring that vulnerabilities are fixed.

6. Lean on Congress to pass “bipartisan data privacy legislation”

The executive order is a message to Congress to speed things up. Biden is calling on lawmakers to ensure that Americans’ privacy is protected while prominent AI players train their models. Children’s privacy will be a primary focus.

7. Dig into companies’ data policies

The White House says that it will evaluate how agencies and third-party data brokers collect and use “commercially available” information, meaning public datasets. Some “personally identifiable” data is available to the public, but that doesn’t mean AI players have free rein to use this information.

8. Clamp down on discrimination exacerbated by AI

Guidance will be rolled out to landlords, federal contractors, and more to reduce the possibility of bias. On top of that, the government will introduce best practices to address discrimination in AI algorithms. Plus, the Biden administration will address the use of AI in criminal sentencing.

9. Attract top global talent

As of today, the ai.gov site has a portal for applicants seeking AI fellowships and job opportunities in the U.S. government. The order also seeks to update visa criteria for immigrants with AI expertise.

10. Support workers vulnerable to AI developments

The Biden administration will support workers’ collective bargaining influence by developing principles and best practices to protect workers against potential harms like surveillance, job replacement, and discrimination. The order also announced plans to produce a report on AI’s potential for disrupting labor markets.

Mashable will be down in D.C. to get more information about how the new AI executive order will affect major players like OpenAI, Google, and Microsoft as well as the average American citizen. Stay tuned for our coverage on this matter.