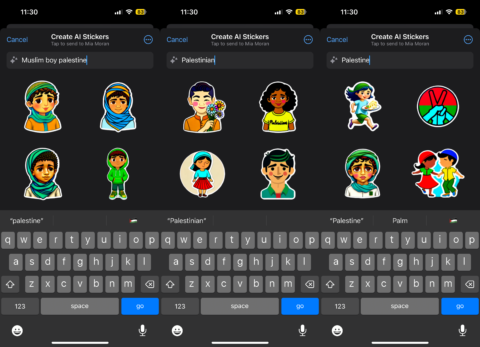

When users search for “Palestine” on Meta-owned WhatsApp, the AI-generated stickers return biased images.

Earlier this year, Meta-owned WhatsApp started testing a new feature that allows users to generate stickers based on a text description using AI. When users search “Palestinian,” “Palestine,” or “Muslim boy Palestine,” the feature returns a photo of a gun or a boy with a gun, a report from The Guardian shows.

According to The Guardian’s Friday report, search results vary depending on which user is searching. However, prompts for “Israeli boy” generated stickers of children playing and reading, and even prompts for “Israel army” didn’t generate photos of people with weapons. That, compared to the images generated from the Palestinian searches, is alarming. A person with knowledge of the discussions, whom The Guardian did not name, told the news outlet that Meta’s employees have reported and escalated the issue internally.

“We’re aware of this issue and are addressing it,” a Meta spokesperson said in a statement to Mashable. “As we said when we launched the feature, the models could return inaccurate or inappropriate outputs as with all generative AI systems. We’ll continue to improve these features as they evolve and more people share their feedback.”

It is unclear how long the differences spotted by The Guardian persisted, or whether they still persist. For example, when I search “Palestinian” now, the search returns stickers of a person holding flowers, a smiling person with a shirt that says what looks like “Palestinian,” a young person, and a middle-aged person. When I search “Palestine,” the results show a young person running, a peace sign over the Palestinian flag, a sad young person, and two faceless kids holding hands. When I search “Muslim boy Palestinian,” the search shows four young smiling boys. Similar results are shown when I search “Israel,” “Israeli,” or “Jewish boy Israeli.” Mashable had multiple users search for the same words and, while the results differed, none of the searches for “Palestinian,” “Palestine,” “Muslim boy Palestinian,” “Israel,” “Israeli,” or “Jewish boy Israeli” resulted in AI stickers with any weapons.

There are still differences, though. For instance, when I search “Palestinian army,” one image shows a person in uniform holding a gun, while three others are just people in uniform; when I search “Israeli army,” the search returns three people in uniform and one person in uniform driving a military vehicle. Searching for “Hamas” returns no AI stickers. Again, each search will differ depending on the person searching.

This comes at a time when Meta has come under fire for allegedly shadowbanning pro-Palestinian content, locking pro-Palestinian accounts, and adding “terrorist” to Palestinian bios. Other AI systems, including Google Bard and ChatGPT, have also shown significant signs of bias about Israel and Palestine.